In this post, we shall learn basic Docker concepts for beginners.

It Works on My Machine:

“It works on my machine. Why does it not work the same way in your environment?” … This is a phrase that we, as technology engineers, hear frequently in our day-to-day work. Essentially, what worked perfectly well in the development environment does not work the same way in the QA or production environment. Why? The most probable reason is the “Matrix of Hell” …

The Matrix of Hell:

A traditional end-to-end application stack consists of several components: a web server (e.g. Node.js), a database (e.g. MongoDB), a messaging system (e.g. Redis), and an orchestration tool (e.g. Ansible). Each of these relies on its own libraries and dependencies, which are hosted on the OS and the hardware infrastructure.

We run into the following issues when developing applications with all these different components.

- Compatibility with the underlying operating system: We must ensure that all these different services are compatible with the version of the operating system we are planning to use. Often, when certain versions of these services are not compatible with the OS, we must go back and look for another OS that is compatible with all of them.

- Compatibility between the services and their libraries and dependencies: One service may require one version of a dependent library, whereas another service requires a different version. The architecture of the application may also change over time, so we may need to upgrade to newer versions of these components, change the database, and so on. Every time something changes, we have to go through the same process of checking compatibility between these components and the underlying infrastructure.

These compatibility issues are usually referred to as the Matrix of Hell.
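The library-version clash described above can be illustrated with a small Python sketch. The service names, library names, and version numbers are hypothetical and chosen only for illustration; real dependency resolution is considerably more involved.

```python
from collections import defaultdict

# Hypothetical services and the library versions they need (illustrative only).
REQUIREMENTS = {
    "web_server": {"libssl": "1.1", "libxml": "2.9"},
    "database": {"libssl": "3.0"},
    "messaging": {"libxml": "2.9"},
}


def find_conflicts(requirements):
    """Return libraries that different services need in different versions."""
    versions_by_library = defaultdict(dict)
    for service, libraries in requirements.items():
        for library, version in libraries.items():
            versions_by_library[library][service] = version

    # A library is a conflict when its services do not all agree on one version.
    return {
        library: wanted
        for library, wanted in versions_by_library.items()
        if len(set(wanted.values())) > 1
    }


if __name__ == "__main__":
    for library, wanted in find_conflicts(REQUIREMENTS).items():
        print(f"{library}: {wanted}")
```

Every new service or upgrade adds another row to this table, which is why the checking has to be repeated each time something changes.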

On top of this, every time a new developer comes on board, setting up a new environment can be very difficult. New developers must follow a long set of instructions and run hundreds of commands to finally set up their environments. They have to make sure that they are using the right operating system and the right versions of each of these components, and each developer has to set all of that up on their own every time.

We also have different development, test, and production environments. One developer may be comfortable with one OS while others use another, so we cannot guarantee that the application we are building will run the same way in different environments. All of this makes developing, building, and shipping the application really difficult.

Docker: So we need something that can help us resolve these compatibility issues. We need something that allows us to modify or change these components without affecting the other components, and even to modify the underlying operating system as required. This is where Docker comes in.

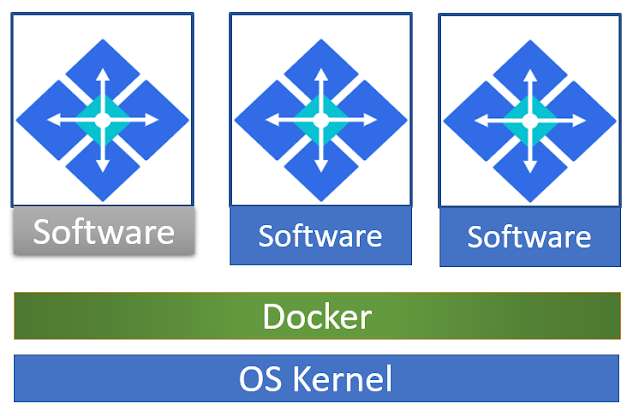

With Docker, we can run each component in a separate container with its own libraries and dependencies, all on the same VM and OS but within separate environments, or containers. We only have to build the Docker configuration once, and all our developers can then get started with a simple docker run command, irrespective of the underlying operating system they run. All they need to do is make sure that they have Docker installed on their systems.

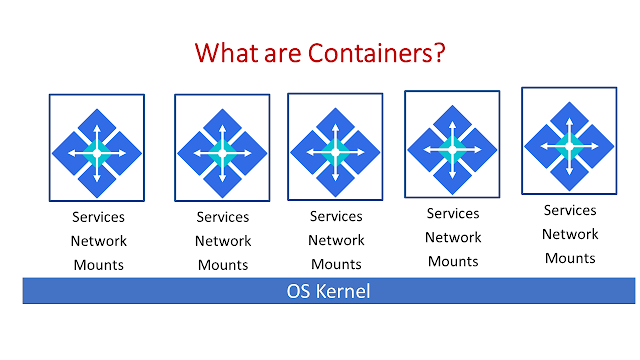
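As a rough sketch of what "a simple docker run command" per component might look like, the Python snippet below only builds and prints the command lines; it does not start anything. The image names are an assumption: they are public Docker Hub images picked for illustration, and the post does not say which images or tags the stack actually uses.

```python
import shlex

# Assumption: these are public Docker Hub image names chosen for illustration;
# the post does not say which images or tags the stack uses.
COMPONENTS = {
    "webserver": "node",
    "database": "mongo",
    "messaging": "redis",
}


def docker_run_command(name, image):
    """Build the argument list for starting one component in its own container."""
    # -d runs the container in the background; --name gives it a readable name.
    return ["docker", "run", "-d", "--name", name, image]


if __name__ == "__main__":
    for name, image in COMPONENTS.items():
        print(shlex.join(docker_run_command(name, image)))
```

In practice the web server would usually run from an image built for the application itself rather than from the bare node image, but the shape of the command stays the same.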

So what are containers?

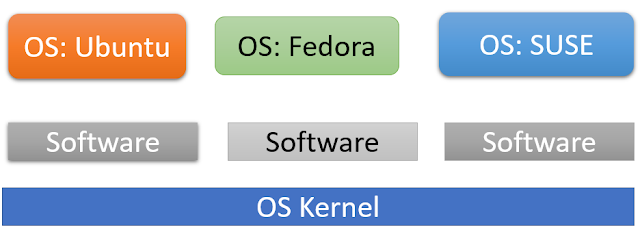

To understand how Docker works, let us first revisit some basic concepts of operating systems.

The OS kernel is responsible for interacting with the underlying hardware. Take Linux as an example: across the different Linux-based operating systems, the kernel remains the same, and it is the software above the kernel that makes these operating systems different. This software may consist of a different user interface, drivers, compilers, file managers, developer tools, etc. So you have a common Linux kernel shared across all of these operating systems, and some custom software that differentiates them from each other.

Docker containers share the same underlying kernel:

So what about an OS that does not share this kernel? Take Windows, which does not share the Linux kernel. You will not be able to run a Windows-based container on a Docker host running Linux. For that, you would need Docker on a Windows server.

You might ask: is that not a disadvantage, not being able to run another kernel on the OS? The answer is “No”, because unlike hypervisors, Docker is not meant to virtualize and run different operating systems and kernels on the same hardware. The main purpose of Docker is to containerize applications, ship them, and run them.

A container runtime provides an abstraction layer that allows applications to be self-contained and all applications and dependencies to be independent of each other.
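One informal way to see the shared kernel is to ask a process which kernel it is running on. The minimal sketch below uses Python's standard platform module; kernel_info is a helper name introduced here for illustration. On a Linux Docker host, running it on the host and inside a container would be expected to report the same kernel release. Setups that run containers inside a helper virtual machine (such as Docker Desktop) would report that VM's kernel instead.

```python
import platform


def kernel_info():
    """Report the kernel that this Python process is running on."""
    return {
        "system": platform.system(),    # e.g. "Linux" or "Windows"
        "release": platform.release(),  # kernel release string
    }


if __name__ == "__main__":
    print(kernel_info())
```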

To Summarize:

Docker can be understood through the following three basic concepts.

- Containers

- Images

- Registries

1. Containers: A container is what you eventually want to run and host in Docker. You can think of it as an isolated machine, or loosely as a virtual machine if you prefer, although it shares the host's kernel rather than running its own.

From a conceptual point of view, a container runs inside the Docker host, isolated from the other containers and even from the host OS. It cannot see the other containers or physical storage, or receive incoming connections, unless you explicitly state that it can. It contains everything it needs to run: the OS software above the kernel, packages, runtimes, files, environment variables, and standard input and output.

2. Images: Any container that runs is created from an image. An image describes everything that is needed to create a container; it’s a template for containers. You may create as many containers as needed from a single image.

3. Registries: Images are stored in a registry. Each container lives its own life, but containers created from the same image share a common root: their image from the registry.
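The relationship between the three concepts can be sketched as a toy Python model. This is a conceptual illustration only, not how Docker is implemented; the class names, the example-app image, and the file paths are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Image:
    """A read-only template: a name plus the files it ships."""
    name: str
    files: tuple


@dataclass
class Container:
    """A running instance of an image with its own private changes."""
    image: Image
    changes: dict = field(default_factory=dict)

    def write(self, path, content):
        # Writes stay in this container; the image is never modified.
        self.changes[path] = content


class Registry:
    """A store that images are pushed to and pulled from."""

    def __init__(self):
        self._images = {}

    def push(self, image):
        self._images[image.name] = image

    def pull(self, name):
        return self._images[name]


if __name__ == "__main__":
    registry = Registry()
    registry.push(Image(name="example-app", files=("app.js",)))

    image = registry.pull("example-app")
    first, second = Container(image), Container(image)
    first.write("/tmp/log.txt", "hello")

    print(first.image is second.image)  # both containers share one image
    print(second.changes)               # the second container is unaffected
```

The model mirrors the text: one image can back any number of containers, and whatever one container changes stays private to that container.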